M.017 Shadows of trouble

AI Work Part 2: AI thinking in context

You are reading Molekyl, finally unfinished ideas on strategy, creativity and technology.

When I was younger, I was an avid sleepwalker. I usually didn’t realize it when it happened and had to be told about it later by others. But I do remember a few episodes where I woke up in the middle of the experience. And it was the strangest thing.

To illustrate: twice during my late teens I woke up in the shower, in the middle of the night, with shampoo in my hair.

Highly confusing at the time. Very fun in retrospect. But also fascinating (and a bit scary) to think about all the decisions I made without being in any way cognitively present.

I got out of bed. Navigated out of my room, up a few stairs, through a dark living room, down some other stairs, opened the bathroom door, turned on lights, locked the door (one of the two times I also hid the key!), got undressed, turned on the water, adjusted the temperature, and started washing my hair. All without being aware of making any of these decisions.

I apparently stopped walking in my sleep in my early 20s, and haven’t thought much about it since. Until recently, when I came to think of how similar sleepwalking is to how many of us use AI: suddenly waking up to an AI having produced something, without remembering or understanding the decisions and thinking that got us there.

At the same time as cognitive sleepwalking with AI is becoming a bigger concern, productive use of AI for thinking tasks becomes a bigger opportunity. Knowing when and how to stay awake, when it’s fine to sleepwalk in the shadows of AI, and how to consciously shift between different uses, suddenly becomes one of those new skills we should talk more about. To do so, we need to first look into the different ways in which AI can be used for thinking tasks.

Thinking with AI

In my last post, I explored potential consequences of AI work as a new mode of work. Work is about thinking and doing to achieve something, meaning that AI work should be about AI thinking and AI doing.

Since thinking affects doing, and doing affects thinking, it is often practically difficult to separate the two. But it still makes sense to do just that to further explore the different modes of AI work, here focusing on its thinking component.

In this context, AI thinking is not about thinking machines, but about how AI can be used to solve tasks usually requiring human thinking. Like problems related to learning, coming up with new ideas, analyzing a situation, making a decision, synthesizing information and more.

Viewed this way, AI thinking can both substitute and complement human thinking. When it’s a substitute, it’s used to solve tasks that humans normally would solve with thinking. When it’s a complement, it enhances a human’s innate thinking abilities one way or another.

While the cognitive sleepwalking problem is primarily nested within the “AI as a substitute” bucket, this doesn’t necessarily mean that all substitute use of AI for thinking tasks is bad. Or that all complement use is good.

To understand when sleepwalking is an issue, and what we can do to avoid ending up there, we also need to factor in another dimension: the level of cognitive agency of the human in the loop.

The Four Modes of AI Thinking

When I was walking around in my sleep taking showers, my agency was low as my unconscious brain made its own decisions on my behalf. When using AI for thinking tasks, human agency might vary quite a lot depending on both the user and the task at hand.

With high human agency, a person of flesh and blood is the orchestrator who controls the direction, pace, and focus of the thinking. What to think about, how to structure problems, how to weigh alternatives, and so forth.

With low human agency, the AI is driving the process. A human can still very much be involved, but follows the AI’s lead rather than driving the process themselves.

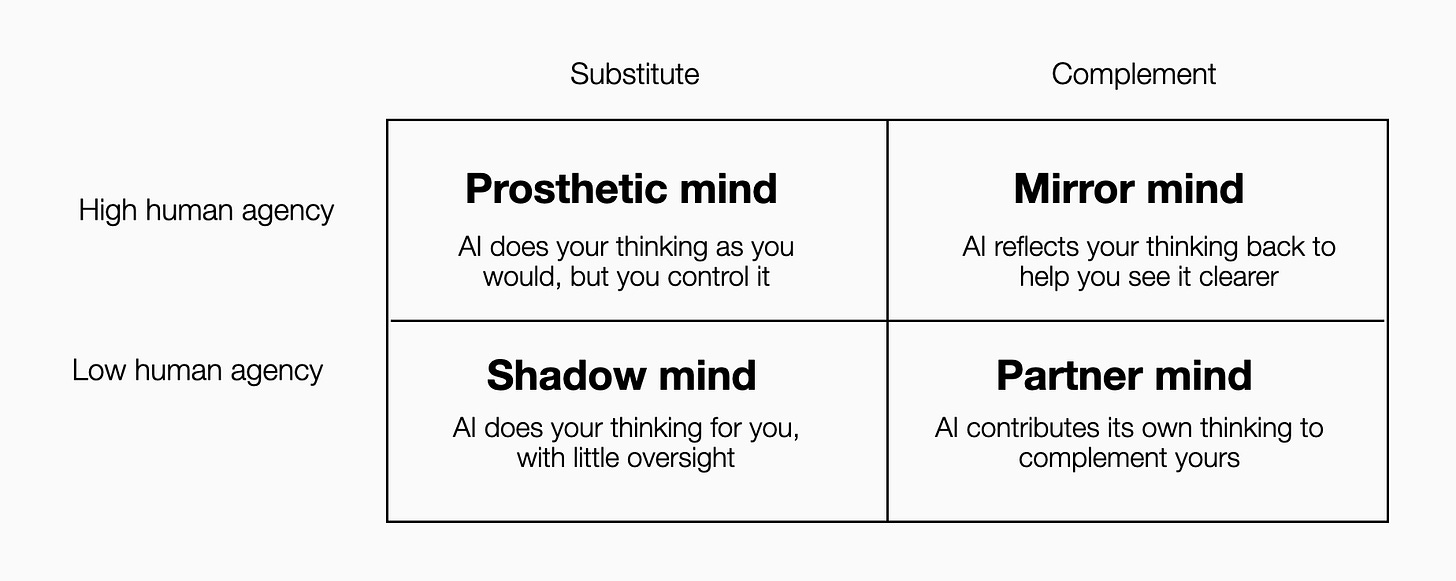

If we then combine the dimensions of human agency and substitute/complement, we get a neat map of four distinct modes of AI thinking:

One more prone to cognitive sleepwalking than the others. Three offering alternatives that keep you awake. Let’s have a look at each.
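For readers who think better in code, the map can also be written as a small lookup table. This is only an illustrative sketch: the names `Role`, `Agency`, `MODES`, and `thinking_mode` are made up for this example, and the only thing taken from the framework is which combination of the two dimensions gives which mode.

```python
from enum import Enum


class Role(Enum):
    """Does the AI replace human thinking or add to it?"""
    SUBSTITUTE = "substitute"
    COMPLEMENT = "complement"


class Agency(Enum):
    """How much the human steers direction, pace, and focus."""
    LOW = "low"
    HIGH = "high"


# The four quadrants of the map (hypothetical encoding of the framework).
MODES = {
    (Role.SUBSTITUTE, Agency.LOW): "Shadow Mind",
    (Role.SUBSTITUTE, Agency.HIGH): "Prosthetic Mind",
    (Role.COMPLEMENT, Agency.LOW): "Partner Mind",
    (Role.COMPLEMENT, Agency.HIGH): "Mirror Mind",
}


def thinking_mode(role: Role, agency: Agency) -> str:
    """Return the mode name for a given combination of role and agency."""
    return MODES[(role, agency)]


if __name__ == "__main__":
    for (role, agency), mode in MODES.items():
        print(f"{role.value:<10} + {agency.value:<4} agency -> {mode}")
```

Real use rarely sits cleanly in one cell, so the table is best read as a way to name where you are, not as a classifier.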

Shadow Mind

The mode most prone to cognitive sleepwalking is using AI as a shadow mind. Here, AI substitutes for our own thinking, while we maintain minimal agency over the direction or approach.

Efficient? Yes, no doubt. Just like it’s efficient to take the morning shower while you sleep.

Problematic? Also yes. Because it’s so easy to fall prey to the illusion that it’s us doing the thinking, when it really is the AI.

The root cause of the problem is that this is the easiest way to use AI. It’s the path of least resistance. We just tell an AI what we need, lean back, and watch output emerge. While a machine is doing the cognitive heavy lifting.

To exemplify, it would cost me way less sweat and tears to write my next Molekyl post using a shadow mind approach. I could just ask Claude to write a new post in the style of my previous posts. I could then make some adjustments here and there, and if it turned out good, I might even feel smart and authorial. After all, I gave it the task, and even looked over and revised the output, right?

I don’t write posts this way for the simple reason that it wouldn’t be me who did the thinking. It would be the AI who decided which topic to write about, which patterns from my writing to use, what assumptions to make, which examples to pick, how to structure the narrative, and which conclusions to highlight. The AI would become the thinking equivalent of the ghost writers who pen celebrity autobiographies. The final product appears to others, and even to the celebrity, as a result of their own thinking, but really, the cognitive work was done by someone else.

While I deliberately shy away from using AI as a shadow mind for work I care about, it’s easy to see why it’s seductive. Ease is one thing, but another is that it makes us feel productive. We produce something. We see real results. We get a little dopamine kick from “completing” or creating something cognitively demanding. Which can feel great.

And who can blame us? Having a machine solve problems that usually require us to think is indeed magical. And its ease makes it the natural way for most to start getting something out of AI.

But riding the illusion of productivity masks the unpleasant truth that we have outsourced the actual thinking to the shadows. Which comes with its own set of problems.

In previous posts I have discussed problems like shallow learning, reduced distinctiveness, and trusting AI when you shouldn’t. But it can also harm our ability to think itself.

Already in the 1960s, people like computer scientist and AI pioneer Joseph Weizenbaum warned about how excessive reliance on computers for cognitive tasks could limit our thinking abilities. Consistently relying on AI to do the cognitive heavy lifting simply means less exercise for our cognitive muscles.

In the longer run, this might make it difficult for each of us to stand apart from other humans. And it might also weaken the things that set each of us apart from machines. Like having the ability to uniquely structure problems, develop original arguments, and create novel analytical approaches and ideas.

All this is concerning. And more so if we add that AI is becoming more and more helpful by the day, making it increasingly easy to sleepwalk through cognitive tasks. All while we might not even realize that we are doing so.

Is there something we can do to wake up?

Stepping out of the Shadows

I think yes, and that the solutions are found in the three other modes of AI thinking. Each offers an alternative take on how AI can be used for thinking tasks. Let’s have a look.

Prosthetic Mind

Using AI as a prosthetic mind is the closest sibling to shadow mind, in the sense that it also uses an AI to substitute for human thinking. The difference is that the agency is with the human.

Which is important.

In prosthetic mind mode, we deliberately delegate and specify thinking tasks to AI in ways that give us greater control over the decisions and judgment leading to an output. In a sense, the AI becomes a cognitive prosthetic. An artificial limb operating on our instructions, carrying out tasks we could have done ourselves, in the way we would have done it.

It could be to ask an AI to carry out an analysis according to your detailed specifications, structure an argument following your logical framework, or generate options within parameters you define.

The key characteristic is that we delegate and manage the AI in ways that let us maintain agency over what gets analyzed and how, even though the AI is doing the actual cognitive work.

Prosthetic AI thinking is less efficient on a new task, but once scaled, the efficiency advantage of the shadows is quickly nullified. If I give an AI detailed instructions for how to do one strategic analysis the way I would have done it, I can easily do as many such analyses as I want with almost no extra effort.

The crux of the prosthetic mind mode, however, is that effective delegation and management of an AI is a skill of the human in the loop. A point raised already in the 1960s by Herbert Simon.

A person with a deep understanding of the problem and how it should be solved, and the ability to articulate this precisely, will get better results than a person without these skills. The former will also be better at knowing which cognitive steps can be outsourced to AI, and at understanding what role human judgment needs to retain.

Using AI as a prosthetic mind keeps us awake, but it does come with some potential catches. One is that for many tasks, we might not have the unique skills, domain knowledge or insights required to make the final result better than what an AI would have produced on its own. And less so by the day as AI improves.

Another catch, which is more a catch-22, is that by not occasionally doing the thinking tasks ourselves, the nuanced understanding needed to effectively delegate to AI might deteriorate over time. With the result that we decay back into shadow mind without really noticing.

Partner Mind

Where the first two modes are substitutes to human thinking, the last two are complements. What separates them is the level of human agency.

When we use AI as a partner mind, the agency is more on the side of the AI. It could be as a knowledgeable AI tutor in learning situations, or an experienced AI coach that guides us through an analytical process we don’t know. In ways that complement our own domain expertise, unique insights or ideas.

Partner mind is the AI thinking mode closest to collaborating with someone who has a different skill set than yourself. Done right, the AI becomes a thinking partner with its own distinct contributions and direction, complementing your own skills and competences.

A simple way to nudge an AI in this direction is to have it interview you (one question at a time) about everything it thinks it needs to help solve the problem. The AI helps by asking questions, and you contribute your views and expertise. With the benefit that you don’t have to think about what to think about yourself. From there, you can also prompt it to challenge your answers.

A well-designed AI partner brings the outside perspective and structure that can complement our own creativity, intuition, and domain knowledge. Producing outcomes different from what each party could achieve in isolation.

While having clear benefits, using AI as a partner mind also comes with a caveat. It will always be at the mercy of whoever designed your AI partner. Whether it’s your own prompting of a bot, or AI tutoring modes from companies like Khan Academy or OpenAI.

A well-designed partner mind AI can be a powerful amplifier. A poorly designed partner AI can lead us astray without our realizing it. Introducing a risk of sophisticated sleepwalking, where we might do things right without being able to assess whether we are doing the right things.

Mirror Mind

The final AI thinking mode is when the AI serves as a complement to human thinking, while the agency is with the human. Where the partner mind is a source of direction, mirror mind is a source of reflection.

Using AI as a mirror mind might involve explaining your ideas and reasoning to an AI and having it rephrase, give feedback, or identify logical gaps in your thinking. Not doing the thinking for us, but reflecting our own thoughts back in ways that increase the clarity of our thinking.

Having deep dialogues about our own ideas with someone that never gets bored or tries to change topic, allows us to hold thoughts for longer and to go deeper faster than we otherwise could. All while the written form keeps a paper trail we can pick up on later, preventing fleeting ideas from getting away.

When using LLMs this way, I always prompt them to never attempt to steer the conversation with questions, to give short replies, to reflect my thinking back at me, and to never tackle more than one point in each interaction. All to curb the AI’s tendency to be too helpful, jump to conclusions, distract with questions, or give overly long answers.
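As a rough illustration, those four ground rules can be kept in a reusable prompt template. The sketch below only assembles text and does not call any AI service. The names `MIRROR_RULES` and `build_mirror_prompt` and the exact wording of the rules are assumptions made for this example, not a fixed recipe.

```python
# Hypothetical wording of the four ground rules described above.
MIRROR_RULES = [
    "Never attempt to steer the conversation with questions.",
    "Keep your replies short.",
    "Reflect my thinking back at me.",
    "Never tackle more than one point per reply.",
]


def build_mirror_prompt(topic: str) -> str:
    """Build an opening message that asks an LLM to act as a mirror.

    The returned string can be pasted into any chat interface.
    """
    rules = "\n".join(f"- {rule}" for rule in MIRROR_RULES)
    return (
        f"I want to think out loud about: {topic}\n\n"
        f"Rules for your replies:\n{rules}"
    )


if __name__ == "__main__":
    print(build_mirror_prompt("how friction shapes good thinking"))
```

Keeping the rules in one place makes it easy to adjust a single rule and see how the dialogue changes.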

The result is creative dialogues resembling chats with a smart person over a beer or coffee. Spontaneous, explorative, patient, and focused.

While the mirror mode is by far the most human of the four modes, it’s also the most demanding because everything depends on the quality of what each of us brings to the conversation. I find it super useful for creative explorations of ideas in areas where I know much and have much to add. In areas where I’m less competent, far less so.

So its benefits essentially depend on us. And not the AI. We need to have some substantive thoughts worth reflecting on. Analytical skills to build on the reflections productively. Clear communication abilities to articulate our reasoning well enough that the AI can meaningfully reflect on what we share. A critical perspective that alerts us to the downsides of operating in a purposely designed intellectual echo chamber. And the discipline to stay in the driver’s seat rather than drifting into asking the AI to think for us.

The Gravitational Pull of the Shadows

Like any framework, this too simplifies reality. In real AI-thinking interactions, people don’t stay neatly in one mode. We switch back and forth between modes depending on the task at hand. At least ideally.

In practice, however, I worry that the gravitational pull of the shadow often leads us to switch much less than we should.

LLMs are designed to be as helpful as possible, removing any friction they can. Unless we tell them not to, they eagerly complete our thoughts, solve our problems, and generate our content for us. The path of least resistance is always one prompt away. In the form of an AI ready to helpfully take over your thinking.

Being unaware of this gravitational pull increases the danger of unconsciously drifting into the shadows when using AI for thinking tasks. Into a mode of cognitive sleepwalking, where we might wake up with shampoo in our hair without knowing how it got there.

One remedy is to be aware of this pull. Mapping out the thinking modes began as my own attempt to better understand the different ways I use AI for thinking tasks. And I think it has helped me more consciously choose modes that fit a given task and reduce the natural tendency to drift into sleepwalking in the shadows of AI.

And that point might be the biggest takeaway from all of this. To be conscious. Awake. To avoid unknowingly drifting into sleepwalking with AI.

If we are awake, the shadows become less of a concern and more just another mode we may use deliberately.

Because I do often use AI as a shadow mind. But primarily for less important tasks where I am fine with outsourcing thinking and judgment to something else. Like finding me a good deal on a toaster, summarizing a paper or book I wouldn’t read otherwise, or exploring a topic I have already written or thought much about. Or I might use it creatively, to see if the AI comes up with ideas or perspectives I hadn’t considered myself.

When I look to people who are much more literate with LLMs and other AIs than me, I see this almost intuitive, fluid way of shifting naturally between modes. When I ask why they do as they do, the answer is often that a choice feels right for the task at hand. Including knowing when to purposely stay out of the shadows, and when to purposely use AI as a shadow mind.

So there might be a pattern here. Most people get their first experiences with AI as a shadow mind. Many step out of the shadows to explore alternative ways of thinking with AI as they get more experienced. But many also drift back into sleepwalking because the ease and effectiveness of the shadows are so seductive.

Staying Awake

I eventually stopped sleepwalking in my early 20s. With AI, we’re all at risk of walking in our sleep.

AI’s greatest promise is to reduce friction in thinking work. But thinking needs friction to be effective. The struggle to structure a problem, the effort to develop an argument, the time and work needed to connect ideas. These inefficiencies should be preserved, not eliminated.

But using AI as a shadow mind isn’t inherently bad. Unconscious use of it is.

A skill for tomorrow is therefore to know when you are in the shadows and to deliberately choose whether to stay. It’s about developing the sensitivity to notice when you have drifted and having the discipline to shift modes when needed. And the confidence to trust your own assessments.

In developing these skills, our own capabilities, like domain expertise, experience, critical thinking, communication skills, and creativity, likely matter more than ever. They determine how much value we can extract from prosthetic and mirror modes. They determine the value each of us can extract from a partner mind AI that is also available to others. And they determine our ability to spot when shadow mind might actually serve us, and when it does not.

While resisting the pull of the shadows is an ongoing battle, it is better to occasionally wake up confused in the cognitive shower than to never realize that we are sleepwalking at all.